Runner

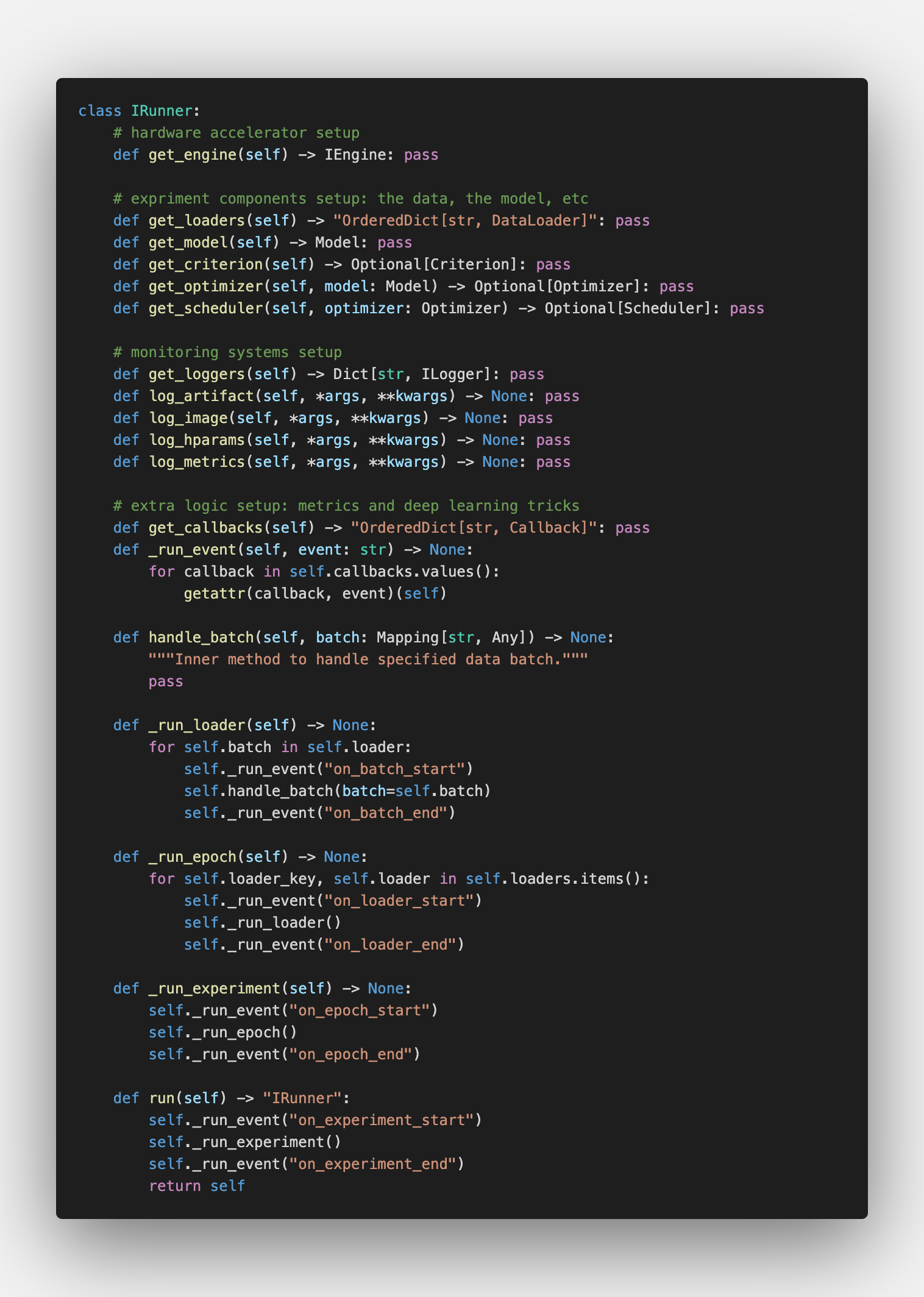

The Runner is an abstraction that holds all the logic of your deep learning experiment: the data you are using, the model you are training, the batch handling logic, and everything about the selected metrics and monitoring systems.

The Runner plays the most crucial role: it connects all other abstractions and defines the whole experiment logic in one place.
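To make the idea concrete, here is a minimal, framework-free sketch of what "the whole experiment logic in one place" can look like. It is not Catalyst's implementation: `SketchRunner`, `LinearRegressionRunner`, the `run` method, and the toy data are hypothetical names invented for illustration, and the learning rate of 0.05 is an arbitrary choice. The base class owns the epoch and batch loops and the metric averaging, while the subclass supplies only the batch handling logic.

```python
from statistics import mean


class SketchRunner:
    """Hypothetical, framework-free stand-in for a runner (not Catalyst's API)."""

    def __init__(self, model):
        self.model = model        # any object holding trainable state
        self.batch_metrics = {}   # filled by handle_batch for every batch
        self.epoch_metrics = []   # one dict of averaged metrics per epoch

    def handle_batch(self, batch):
        """Batch handling logic; subclasses define the experiment here."""
        raise NotImplementedError

    def run(self, loader, num_epochs):
        for _ in range(num_epochs):
            history = []
            for batch in loader:
                self.batch_metrics = {}
                self.handle_batch(batch)
                history.append(self.batch_metrics)
            # Average every batch metric over the epoch.
            keys = history[0].keys()
            self.epoch_metrics.append(
                {k: mean(m[k] for m in history) for k in keys}
            )
        return self.epoch_metrics


class LinearRegressionRunner(SketchRunner):
    """Fits y = w * x with hand-written forward and backward passes."""

    def handle_batch(self, batch):
        xs, ys = batch
        w = self.model["w"]
        preds = [w * x for x in xs]                            # forward pass
        loss = mean((p - y) ** 2 for p, y in zip(preds, ys))   # MSE loss
        # Gradient of the MSE loss with respect to w (backward pass).
        grad = mean(2 * (p - y) * x for p, y, x in zip(preds, ys, xs))
        self.model["w"] = w - 0.05 * grad                      # SGD step
        self.batch_metrics["loss"] = loss


# Toy data for y = 3x, split into two batches (made up for this sketch).
loader = [([1.0, 2.0], [3.0, 6.0]), ([3.0, 4.0], [9.0, 12.0])]
model = {"w": 0.0}
runner = LinearRegressionRunner(model)
metrics = runner.run(loader, num_epochs=20)
print(model["w"], metrics[-1]["loss"])
```

The data, the model, the batch logic, and the metrics all pass through a single object, so swapping the experiment means overriding one method rather than rewriting the loop.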

Most importantly, it does not force you to use Catalyst-only primitives. It gives you a flexible way to choose how much of the framework's high-level API you want to use.

For example, you could:

- Define everything in a Catalyst-way with Runner and Callbacks: ML — multiclass classification example.
- Write forward-backward on your own, using Catalyst as a for-loop wrapper: custom batch-metrics logging example.
- Mix these approaches: CV — MNIST GAN, CV — MNIST VAE examples.
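The mixed approach can be sketched in plain Python as follows. This is an illustration under stated assumptions, not Catalyst code: `Callback`, `RunningMeanCallback`, `LoopRunner`, the hook names `on_batch_end` and `on_epoch_end`, and the `abs_mean` metric are all hypothetical. The batch logic is written by hand in `handle_batch`, while metric aggregation is delegated to a reusable callback.

```python
class Callback:
    """Hypothetical hook interface; the method names are illustrative only."""

    def on_batch_end(self, runner):
        pass

    def on_epoch_end(self, runner):
        pass


class RunningMeanCallback(Callback):
    """Tracks the mean of one batch metric across an epoch."""

    def __init__(self, key):
        self.key = key
        self.total, self.count = 0.0, 0

    def on_batch_end(self, runner):
        self.total += runner.batch_metrics[self.key]
        self.count += 1

    def on_epoch_end(self, runner):
        runner.epoch_metrics[self.key] = self.total / self.count
        self.total, self.count = 0.0, 0  # reset for the next epoch


class LoopRunner:
    """Owns only the loop; everything else is custom logic or callbacks."""

    def __init__(self, callbacks):
        self.callbacks = callbacks
        self.batch_metrics = {}
        self.epoch_metrics = {}

    def handle_batch(self, batch):
        # Hand-written batch logic: a toy metric, the mean absolute value.
        self.batch_metrics["abs_mean"] = sum(abs(v) for v in batch) / len(batch)

    def run(self, loader):
        for batch in loader:
            self.handle_batch(batch)
            for callback in self.callbacks:
                callback.on_batch_end(self)
        for callback in self.callbacks:
            callback.on_epoch_end(self)
        return self.epoch_metrics


runner = LoopRunner(callbacks=[RunningMeanCallback("abs_mean")])
result = runner.run([[1, -1], [2, -4], [-5, 5]])
print(result)
```

Moving more logic into callbacks pushes the sketch toward the first style in the list, and moving more into `handle_batch` pushes it toward the second.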

Finally, the Runner architecture does not depend on PyTorch in any way, which opens a path toward TensorFlow 2 or JAX support.

If you are interested in this, please check out the Animus project.

Supported Runners are listed under the Runner API section.

If you haven’t found the answer to your question, feel free to join our Slack for discussion.